Rendered vs. DOM and Its SEO Impact

- Zac J.

- Oct 3


The modern web is a complex ecosystem, and for anyone serious about SEO, understanding how browsers and search engines process your content is essential.

Two fundamental concepts often discussed in this context are the "Document Object Model" (DOM) and the "Rendered" version of a webpage. While they sound technical, grasping the difference is necessary for optimizing your site.

What is the DOM?

At its heart, the Document Object Model (DOM) is a programming interface for web documents. It represents the page's structure as a tree of objects, allowing programming languages like JavaScript to access and manipulate its content, structure, and style. Think of it as the raw blueprint of your webpage.

When a browser or a search engine bot first requests a page, the server responds with an HTML file. This initial HTML, along with any embedded CSS and JavaScript, is what the browser uses to construct the DOM.

Initial DOM Construction:

HTML Parsing: The browser reads the HTML as it arrives, converting the markup into tokens and building a tree-like representation of all the elements (headings, paragraphs, images, links, etc.). This is the initial DOM.

CSS Object Model (CSSOM): Simultaneously, the browser parses the CSS (both internal and external stylesheets) to understand how the HTML elements should be styled.

JavaScript: Any JavaScript files linked or embedded in the HTML are also downloaded and parsed.

At this stage, before any JavaScript has executed and potentially altered the page, we have the "initial DOM." This is often what a search engine crawler first sees, especially if it's a basic, non-rendering bot.
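To make the idea of a tree built from raw HTML concrete, here is a minimal sketch using Python's standard `html.parser`. It is a simplification and not how a real browser builds the DOM (it ignores error recovery, attributes, and namespaces). The names `Node`, `TreeBuilder`, `build_tree`, and `outline` are made up for this illustration. Like a non-rendering bot, it never executes JavaScript.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag in HTML.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class Node:
    """One element in a simplified DOM-like tree."""

    def __init__(self, tag, parent=None):
        self.tag = tag
        self.parent = parent
        self.children = []
        self.text = ""


class TreeBuilder(HTMLParser):
    """Builds a nested Node tree from raw HTML, with no JavaScript execution."""

    def __init__(self):
        super().__init__()
        self.root = Node("#document")
        self.current = self.root

    def handle_starttag(self, tag, attrs):
        node = Node(tag, self.current)
        self.current.children.append(node)
        if tag not in VOID_TAGS:
            self.current = node  # descend into the new element

    def handle_endtag(self, tag):
        # Walk up to the matching open element, if there is one.
        node = self.current
        while node is not self.root and node.tag != tag:
            node = node.parent
        if node is not self.root:
            self.current = node.parent

    def handle_data(self, data):
        self.current.text += data.strip()


def build_tree(html):
    """Parse an HTML string and return the root of the simplified tree."""
    builder = TreeBuilder()
    builder.feed(html)
    builder.close()
    return builder.root


def outline(node, depth=0):
    """Return the tree as indented lines, one tag per line."""
    lines = ["  " * depth + node.tag]
    for child in node.children:
        lines.extend(outline(child, depth + 1))
    return lines
```

Calling `"\n".join(outline(build_tree(html)))` on a page's raw HTML prints a nested outline similar to what the "Elements" tab shows, except that nothing added later by JavaScript appears in it.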

The above screenshot is from our own website, specifically the "Elements" tab, highlighting the hierarchical structure of the HTML. This visually represents the DOM.

What is the Rendered Page?

The "rendered page," on the other hand, is what you actually see in your browser window. It's the visual output after all HTML has been parsed, CSS has been applied, and crucially, all JavaScript has been executed and made its modifications.

Many modern websites rely heavily on JavaScript to fetch content, create interactive elements, and even build the entire page structure dynamically. When JavaScript runs, it can modify the DOM: adding new elements, removing existing ones, changing attributes, or altering text content. The rendered page reflects the final state of the DOM after all these dynamic changes have taken place.

The Rendering Process:

Initial DOM & CSSOM: As described above, the browser constructs these from the raw HTML and CSS.

Render Tree: The browser combines the DOM and CSSOM to form a render tree, which includes only the elements that will be visible on the page, along with their calculated styles.

Layout (Reflow): The browser calculates the exact position and size of each element on the screen.

Painting: Finally, the browser draws the pixels onto the screen, making the page visible to the user.

JavaScript Execution: Throughout and after these steps, JavaScript executes, potentially altering the DOM, triggering further layout and painting cycles. The final state after JavaScript execution is what defines the rendered page.
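The render-tree step in the list above can be sketched in a few lines of Python. This is a deliberately reduced model under stated assumptions: styles are already computed and stored on each element, and the only rules applied are that non-visual elements (such as `head` and `script`) and anything with `display: none` are left out. The `Element` class and `build_render_tree` function are hypothetical names for this illustration, not a browser API.

```python
from dataclasses import dataclass, field
from typing import Optional

# Elements that produce no boxes on screen in this simplified model.
NON_VISUAL = {"head", "title", "meta", "link", "script", "style"}


@dataclass
class Element:
    """A DOM element with its already-computed styles (simplified)."""
    tag: str
    style: dict = field(default_factory=dict)
    children: list = field(default_factory=list)


def build_render_tree(element: Element) -> Optional[Element]:
    """Return a copy of the tree containing only elements that would be rendered."""
    if element.tag in NON_VISUAL or element.style.get("display") == "none":
        return None  # the element and all of its descendants are skipped
    rendered_children = []
    for child in element.children:
        rendered = build_render_tree(child)
        if rendered is not None:
            rendered_children.append(rendered)
    # visibility: hidden elements stay in the tree; they still occupy space.
    return Element(element.tag, dict(element.style), rendered_children)
```

The point for SEO is that the render tree is derived from the DOM as it stands at that moment, so every JavaScript change to the DOM can force this step, plus layout and painting, to run again.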

The Network Tab provides a clear view of the two phases:

Initial DOM (The Top Request): Note the first document request (the HTML file). This response contains only the initial raw HTML content, often lacking key sections if the site is client-side rendered.

The Rendering Cost (Subsequent Requests): The requests that follow (JS, CSS, API calls, and fonts), which can number in the hundreds on a JavaScript-heavy site, represent the resources Googlebot must download and execute before the rendered page is complete. The total transfer size and finish time highlight the potential performance and crawl budget cost of client-side rendering.

Why This Distinction Matters for SEO and Ranking

While older crawlers primarily focused on the raw HTML (akin to the initial DOM), modern bots, like Googlebot, are capable of "rendering" pages much like a modern browser, though there are nuances and potential pitfalls that must be considered.

When Initial DOM and Rendered Page Diverge

The most significant SEO challenge arises when the content critical for ranking is not present in the initial HTML but is injected or modified by JavaScript.

If your primary headings, body text, internal links, or calls to action are added via JavaScript, they might not be immediately visible to a search engine bot that doesn't fully render or has issues rendering your page.
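One way to spot this divergence is to compare the text in the initial HTML with the text in the rendered HTML. The sketch below assumes you have already saved both versions as strings (for example from "View Source" and from the DevTools "Elements" panel); it does no fetching or rendering itself. The function names `visible_text` and `js_only_text` are made up for this example.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text fragments, skipping the contents of script and style tags."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.fragments = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse whitespace
        if text and not self._skip_depth:
            self.fragments.append(text)


def visible_text(html):
    """Return the text fragments found in an HTML string, in document order."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.fragments


def js_only_text(initial_html, rendered_html):
    """Return text that exists in the rendered HTML but not in the initial HTML."""
    initial = set(visible_text(initial_html))
    return [text for text in visible_text(rendered_html) if text not in initial]
```

Anything `js_only_text` returns is content that a non-rendering bot would miss, so headings or body copy showing up in that list deserve a closer look.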

JavaScript execution takes time and resources. If a page is heavily reliant on JavaScript to display content, it can lead to slower loading times. Page speed is a confirmed ranking factor, and a slow render can negatively impact user experience metrics and crawl budget.

If you're not sure what we mean by "crawl budget," it's the number of pages Google and other search engine bots will crawl, and the resources they will spend on your site, within a given timeframe.

If rendering your pages is computationally expensive due to heavy JavaScript, Googlebot might spend more time and resources on fewer pages, leaving some content undiscovered or updated less frequently.

Google's Rendering Capabilities

Google has publicly stated that Googlebot renders pages using a modern Chromium-based browser. This means it can execute JavaScript and see the rendered DOM. However, this isn't an instantaneous process, nor is it always perfect.

Google often employs a "two-wave" indexing process. The first wave quickly crawls and indexes the initial HTML. The second wave, which can take longer (hours, days, or even weeks), involves rendering the page and indexing the content found after JavaScript execution. If your content is only in the rendered version, there's a delay. This delay can impact the freshness of your content in search results.

For Googlebot to fully render a page, it needs to be able to access all necessary JavaScript, CSS, and API endpoints. If any of these resources are blocked by robots.txt or fail to load due to server issues, Googlebot won't be able to see the fully rendered page.
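A quick way to check for blocked resources is to test each resource URL against your robots.txt rules. The sketch below uses Python's standard `urllib.robotparser`; the function name `blocked_resources` and the example URLs in the usage note are assumptions for illustration. Note that Python's parser is simpler than Google's (it does not implement Google's wildcard or longest-match behaviour), so treat the result as a first pass rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt, resource_urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]
```

For example, with a robots.txt containing `Disallow: /assets/js/`, passing in `https://example.com/assets/js/app.js` would report that script as blocked, which is exactly the kind of rule that can leave Googlebot with a half-rendered page.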

Errors in your JavaScript can also prevent content from loading correctly, leaving Googlebot with an incomplete or broken rendered page.

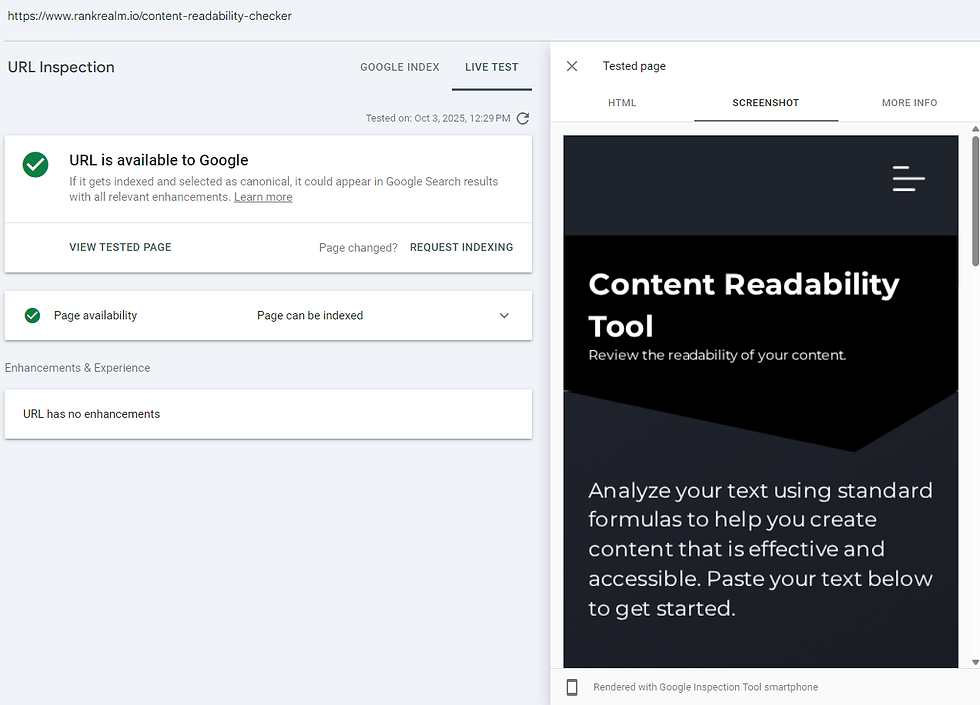

Google Search Console's "URL Inspection" tool shows how Google renders a given page. The image above shows its "HTML" tab (the HTML as Google saw it after rendering) and its "Screenshot" tab (the rendered view) for a JavaScript-heavy page.

Best Practices for SEO in a Rendered Web

Here's how you should approach a site that relies heavily on JavaScript and dynamic rendering:

Prioritize Server-Side Rendering (SSR) or Static Site Generation (SSG)

This is essentially about making sure Google sees the important stuff instantly, before the pretty effects kick in.

For core content, SSR or SSG ensures that the full HTML, with all essential content, is sent directly from the server. This means search engines get the complete picture instantly, without needing to execute JavaScript.

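Hydration can then be used on the client side for interactivity. Hydration is the process where JavaScript runs after the server-generated HTML is already displayed and attaches event handlers and state to it, rather than building the content from scratch.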

Use Dynamic Rendering as a Fallback

If SSR or SSG isn't feasible for your entire site, dynamic rendering can be a solution. This involves serving a pre-rendered, static version of your page to search engine bots, while serving the JavaScript-heavy version to users. This requires careful implementation to avoid cloaking issues, so ensure the content served to bots is substantially the same as what users see.

To communicate this effectively to your development or IT team, specify that you require a dynamic rendering solution (built on a headless-browser tool such as Puppeteer or Rendertron) that inspects the User-Agent header to detect known search engine crawlers (specifically Googlebot).

The critical directive for the team is to guarantee the parity of the content. The DOM generated for Googlebot must match the final, fully-rendered DOM seen by the user. Only the delivery mechanism changes, not the content itself.
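The user-agent detection described above can be sketched as follows. This is a minimal illustration, not a production setup: the crawler tokens in `BOT_PATTERN` are an assumed, incomplete list, and the function names are hypothetical. The User-Agent header can also be spoofed, so a real deployment may want additional verification.

```python
import re

# Assumed, non-exhaustive list of crawler tokens; maintain your own in practice.
BOT_PATTERN = re.compile(r"googlebot|bingbot|duckduckbot|baiduspider|yandex",
                         re.IGNORECASE)


def is_search_bot(user_agent):
    """Return True if the User-Agent header looks like a known search crawler."""
    return bool(BOT_PATTERN.search(user_agent or ""))


def choose_variant(user_agent):
    """Pick which version of the page to serve for this request."""
    # Both variants must carry the same content; only the delivery differs.
    return "prerendered" if is_search_bot(user_agent) else "client"
```

Whatever the server does with the result of `choose_variant`, the parity rule still applies: the pre-rendered HTML should contain the same content a user ends up seeing once JavaScript has run.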

Make Sure That Critical Content is in the Initial HTML

Whenever possible, embed your most important content like headings, core body text, meta descriptions, canonical tags, and key internal links, directly within the initial HTML. JavaScript should enhance, not solely create, your critical content.
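A simple audit of the initial HTML can confirm whether these elements are present before any JavaScript runs. The sketch below checks a raw HTML string for a title, an H1, a meta description, a canonical tag, and links; the class and function names are made up for this example, and it assumes you supply the unrendered HTML yourself.

```python
from html.parser import HTMLParser


class CriticalContentAudit(HTMLParser):
    """Records which SEO-critical elements appear in a raw HTML string."""

    def __init__(self):
        super().__init__()
        self.found = {"title": False, "h1": False,
                      "meta_description": False, "canonical": False}
        self.links = 0
        self._open = None  # "title" or "h1" while inside that element

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1"):
            self._open = tag
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            if (attrs.get("content") or "").strip():
                self.found["meta_description"] = True
        elif tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            if attrs.get("href"):
                self.found["canonical"] = True
        elif tag == "a" and attrs.get("href"):
            self.links += 1

    def handle_endtag(self, tag):
        if tag == self._open:
            self._open = None

    def handle_data(self, data):
        # Only count a title or h1 that actually contains text.
        if self._open and data.strip():
            self.found[self._open] = True


def audit_initial_html(html):
    """Return a dict of which critical elements the raw HTML contains."""
    audit = CriticalContentAudit()
    audit.feed(html)
    audit.close()
    return {**audit.found, "links": audit.links}
```

A client-side rendered page that ships little more than an empty `<div id="root">` would typically come back with `h1` false and `links` at zero, which is a clear signal that the critical content depends on JavaScript.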

Verify JavaScript Accessibility

Make sure the JavaScript, CSS, and API endpoints your pages depend on are crawlable. Check your robots.txt to confirm no essential resources are blocked.

To quickly verify that search engines can access the resources needed to render your page, leverage the Google Search Console URL Inspection Tool. Enter the specific URL you are testing and click "Test Live URL." Once the test is complete, click "View Crawled Page" and inspect the "Screenshot" tab to see the visual representation Googlebot generated.

A perfectly rendered screenshot confirms that all necessary JavaScript and CSS files were accessed and executed. You must also check the "More Info" tab, which will list any resources Google was blocked from crawling, often due to restrictions in your robots.txt file or server access issues.

Monitor with Search Console

Regularly use Google Search Console's URL Inspection tool (as mentioned above). Run a live test on pages with core content, then compare the "HTML" tab with the "Screenshot" tab and review the "More Info" tab (which shows console logs and resource errors).

This is your window into how Googlebot perceives your pages.

Test for JavaScript Impact

Use tools like Lighthouse, PageSpeed Insights, the Chrome UX Report (CrUX), and even browser extensions to see how your page performs and what content is visible before JavaScript execution.

Consider disabling JavaScript in your browser to get a raw view. The simplest and fastest way to see the raw, initial HTML is by using the browser's built-in developer tools.

In Chrome or Edge, open the DevTools window (usually by pressing F12 or Ctrl+Shift+I). Then, open the Command Menu by pressing Ctrl+Shift+P (or Cmd+Shift+P on Mac).

Type "Disable JavaScript," select the command, and refresh the page. This instantly switches off all JavaScript execution for that tab, allowing you to see exactly what content is available to the initial phase of a search engine crawl before any dynamic rendering takes place.

Remember to re-enable it or close the DevTools window when finished testing.

Optimize for Speed

For SEO, page speed rests on three primary optimization goals. First, we must "trim the fat" by implementing code splitting and tree-shaking. Put more simply: remove unused code and serve only the minimum JavaScript a specific page needs.

Second, we should load non-critical scripts (like social embeds or optional tracking) with the defer or async attribute so they don't block the page. Communicate to your developers that the goal for these two points is to get the primary content on screen as quickly as possible, resulting in a fast Largest Contentful Paint (LCP). This can be measured in Search Console, Lighthouse, and other page speed tools.
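To find candidates for defer or async loading, you can list the external scripts in a page's HTML that carry neither attribute. The sketch below is one possible check using Python's standard library; `ScriptAudit` and `parser_blocking_scripts` are hypothetical names. It treats `type="module"` scripts as non-blocking because browsers defer them by default, and it ignores inline scripts.

```python
from html.parser import HTMLParser


class ScriptAudit(HTMLParser):
    """Collects external scripts that lack async, defer, and type=module."""

    def __init__(self):
        super().__init__()
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        attrs = dict(attrs)  # valueless attributes such as async map to None
        src = attrs.get("src")
        is_module = (attrs.get("type") or "").lower() == "module"
        if src and not is_module and "async" not in attrs and "defer" not in attrs:
            self.blocking.append(src)


def parser_blocking_scripts(html):
    """Return the src of each external script that blocks HTML parsing."""
    audit = ScriptAudit()
    audit.feed(html)
    audit.close()
    return audit.blocking
```

Each URL this returns is worth reviewing with your developers: if the script is not needed to display the primary content, it is a likely candidate for `defer` or `async`.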

The third goal is optimizing the server response time. This directly impacts the Time To First Byte (TTFB), which measures how long the server takes to send the first piece of data.

Inform your IT team that the target is a TTFB below 200ms, stressing that this is a critical infrastructure and caching issue, not just a front-end code problem. Hitting the targets for all three speed goals can give you greater crawl efficiency and a better user experience.

Graceful Degradation

Design your pages so that they are still usable and contain core content even if JavaScript fails or is disabled. This is a good practice for accessibility and ensures a baseline for search engines.

Be Aware, Be Prepared

The distinction between the initial DOM and the rendered page is not just a technicality; it's a critical aspect of modern SEO. While search engines are more capable than ever at rendering JavaScript, relying solely on client-side rendering for important content introduces potential delays and risks.

Your goal is to make it as easy as possible for search engines to find, understand, and index your valuable content. By understanding how rendering works and implementing best practices, you make sure that your site not only looks great to users but also performs optimally.

Have any questions about page load speed optimization? Contact us today and ask one of our experts!
